I have been using this lighting setup for years now. Whenever I need to make a prototype or an outdoor game scene, this is the lighting template I use, so I thought someone might find it useful.

The scene uses three lights: one directional light for the Sun and two hemisphere lights, one for the sky color and one for the reflected ground color. A hemisphere (Ancient Greek: "half of a sphere") light is essentially the same as Half Lambert shading. That makes it a cheap and effective way to imitate sky color and the indirect light bounced off surfaces lit by the Sun. In shader form:

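```glsl
// N = surface normal, L = direction towards the light (both normalized)
float halfLambert(vec3 N, vec3 L)
{
    // Remap N.L from [-1, 1] to [0, 1]: surfaces facing the light get 1.0,
    // perpendicular ones 0.5, and only those facing directly away reach 0.0.
    return max(0.0, dot(N, L) * 0.5 + 0.5);
}
```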

Half Lambert, by the way, was developed by Valve for Half-Life. Valve's original version also squares the result.
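For a quick numeric check outside a shader, here is a Python sketch of the same function. It sits next to plain Lambert and Valve's squared variant (Python being Blender's scripting language). The helper names `dot`, `lambert` and `half_lambert_squared` are illustrative and do not come from any API.

```python
def dot(a, b):
    """Dot product of two 3-component vectors (stand-in for GLSL's dot)."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def lambert(n, l):
    """Plain Lambert: surfaces facing away from the light get 0."""
    return max(0.0, dot(n, l))

def half_lambert(n, l):
    """Same remap as the GLSL halfLambert: N.L in [-1, 1] -> [0, 1]."""
    return max(0.0, dot(n, l) * 0.5 + 0.5)

def half_lambert_squared(n, l):
    """Valve's variant: the remapped term is squared."""
    return half_lambert(n, l) ** 2

light = (0.0, 0.0, 1.0)  # unit vector towards a light directly overhead
for name, n in [("facing", (0.0, 0.0, 1.0)),
                ("side", (1.0, 0.0, 0.0)),
                ("away", (0.0, 0.0, -1.0))]:
    print(name, lambert(n, light), half_lambert(n, light), half_lambert_squared(n, light))
```

The side-facing normal shows why the remap helps. Plain Lambert is already 0.0 there, while half Lambert still returns 0.5 (0.25 when squared). Only surfaces facing directly away from the light go fully dark.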

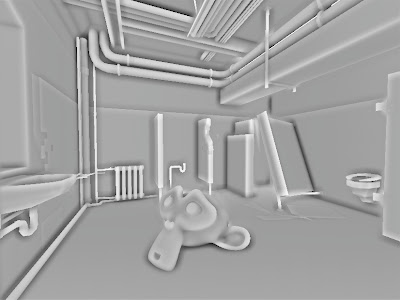

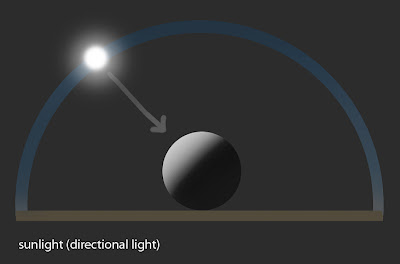

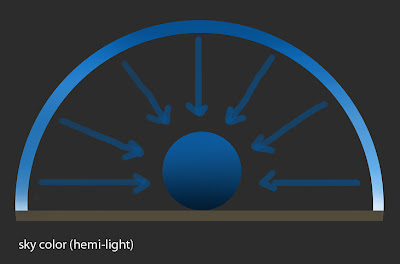

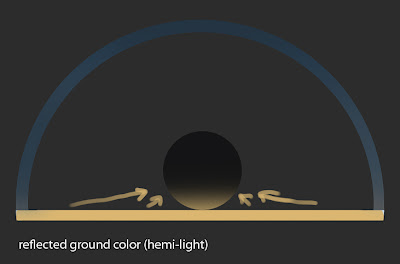

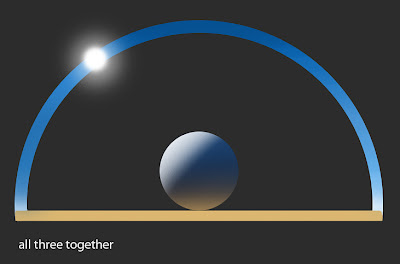

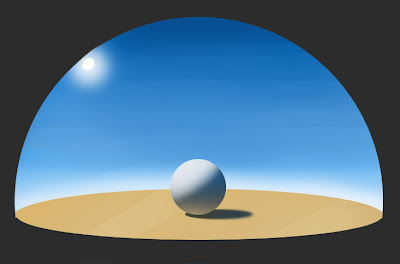

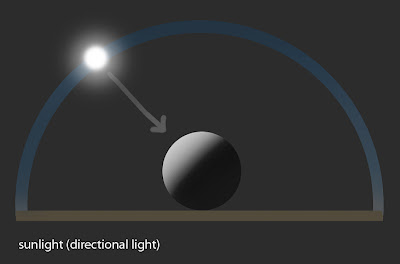

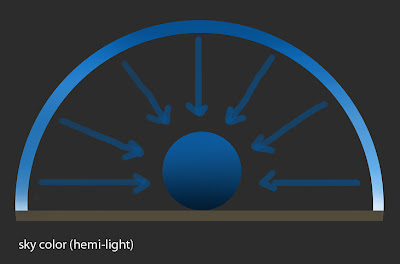

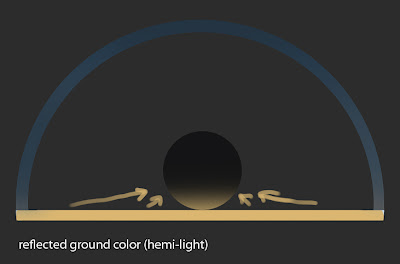

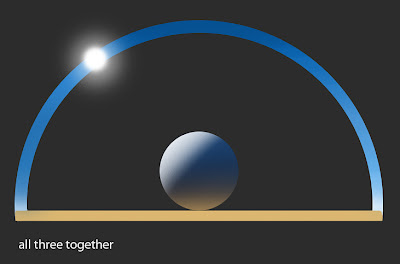

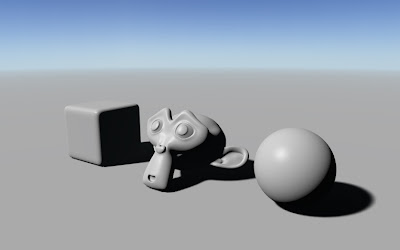

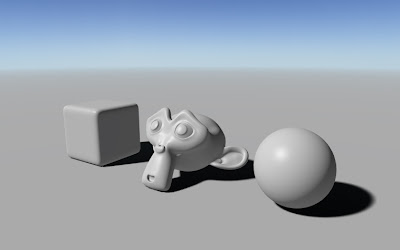

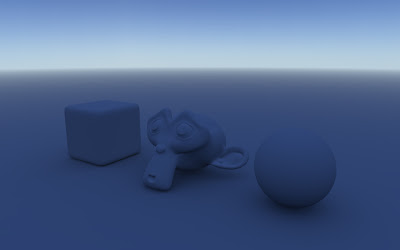

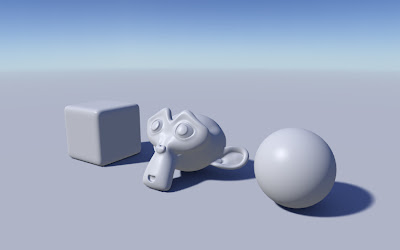

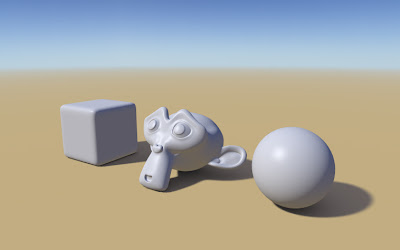

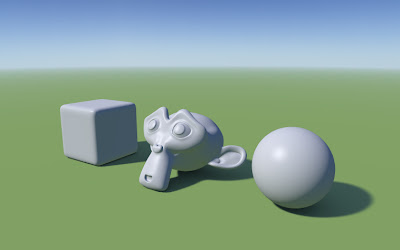

Here are some illustrations:

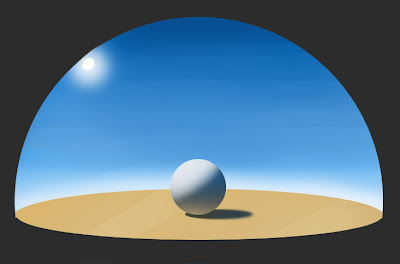

This is an example scene: a clear blue skydome, the Sun roughly midway between the horizon and the zenith, and sand or dirt as the ground plane.

Sun. A directional light with lamp intensity 1.0 and white color.

Sky color. The whole skydome emits a lot of light, in our case bluish. The hemi light points down. I usually use lamp intensity 0.2 and color (Red 0.0, Green 0.2, Blue 1.0).

Reflected ground color. This is indirect light, so its intensity and color depend on the ground surface color. The hemi light points up. Here we use a light orangish color, as sand or dirt would have.
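The following minimal sketch shows how the two hemi lights divide the work. It assumes Blender's Z-up convention and the half Lambert formula above. The sky values are the ones given above. The function names and test normals are hypothetical.

```python
def half_lambert(n, l):
    """Hemi light weight: N.L remapped from [-1, 1] to [0, 1]."""
    d = n[0] * l[0] + n[1] * l[1] + n[2] * l[2]
    return max(0.0, d * 0.5 + 0.5)

def hemi_light(n, direction, color, intensity):
    """RGB contribution of one hemi light for the unit normal n."""
    w = half_lambert(n, direction)
    return tuple(c * intensity * w for c in color)

# Blender is Z-up. The sky hemi "points down", so the vector towards it is +Z;
# the ground hemi "points up", so the vector towards it is -Z.
SKY_DIR = (0.0, 0.0, 1.0)
GROUND_DIR = (0.0, 0.0, -1.0)
# Sky values from the text: intensity 0.2, color (0.0, 0.2, 1.0).
SKY_COLOR, SKY_INTENSITY = (0.0, 0.2, 1.0), 0.2

for name, n in [("up", (0.0, 0.0, 1.0)),
                ("wall", (1.0, 0.0, 0.0)),
                ("down", (0.0, 0.0, -1.0))]:
    sky_w = half_lambert(n, SKY_DIR)
    ground_w = half_lambert(n, GROUND_DIR)
    sky_rgb = hemi_light(n, SKY_DIR, SKY_COLOR, SKY_INTENSITY)
    print(name, round(sky_w, 2), round(ground_w, 2), [round(c, 3) for c in sky_rgb])
```

An up-facing surface gets the full sky term (0.0, 0.04, 0.2), a wall gets half of it, and a down-facing surface gets none. The ground weights mirror this. For any unit normal the two weights sum to 1.0, so the pair acts like a gradient from ground color to sky color, driven by the normal's Z component.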

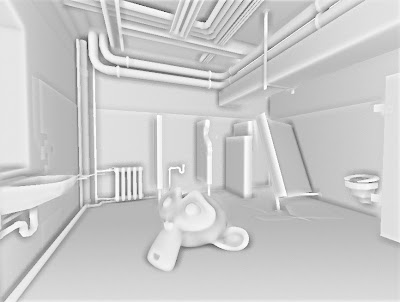

Here are all three lights combined.

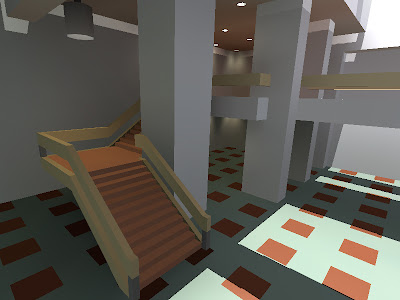
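The full model can be sketched as the surface color times the sum of the three lights. This assumes the lights simply add up like ordinary diffuse lamps and ignores the baked shadows and ambient occlusion. The Sun uses a plain Lambert term. Its 45-degree elevation is hypothetical, and so are the ground hemi's color and intensity, since the text gives no numbers for them.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lambert(n, l):
    """Plain Lambert diffuse weight, used here for the Sun."""
    return max(0.0, dot(n, l))

def half_lambert(n, l):
    """Hemi light weight: N.L remapped from [-1, 1] to [0, 1]."""
    return max(0.0, dot(n, l) * 0.5 + 0.5)

def scale(color, intensity, weight):
    """RGB color times lamp intensity times per-pixel weight."""
    return tuple(c * intensity * weight for c in color)

# Sun: intensity 1.0, white (from the text). The 45-degree elevation is a
# HYPOTHETICAL stand-in for "between horizon and zenith".
elev = math.radians(45.0)
SUN_DIR = (math.cos(elev), 0.0, math.sin(elev))  # unit vector towards the Sun
SUN_COLOR, SUN_INTENSITY = (1.0, 1.0, 1.0), 1.0
# Sky hemi: intensity 0.2, color (0.0, 0.2, 1.0) (from the text), shining from +Z.
SKY_DIR, SKY_COLOR, SKY_INTENSITY = (0.0, 0.0, 1.0), (0.0, 0.2, 1.0), 0.2
# Ground hemi: HYPOTHETICAL light orange color and intensity, shining from -Z.
GROUND_DIR, GROUND_COLOR, GROUND_INTENSITY = (0.0, 0.0, -1.0), (1.0, 0.7, 0.4), 0.2

def shade(n, albedo):
    """Albedo times the sum of all three lights (no shadows or AO)."""
    sun = scale(SUN_COLOR, SUN_INTENSITY, lambert(n, SUN_DIR))
    sky = scale(SKY_COLOR, SKY_INTENSITY, half_lambert(n, SKY_DIR))
    ground = scale(GROUND_COLOR, GROUND_INTENSITY, half_lambert(n, GROUND_DIR))
    return tuple(a * (s + k + g) for a, s, k, g in zip(albedo, sun, sky, ground))

# A wall facing away from the Sun: no direct light, but half of the sky
# term and half of the ground bounce.
print([round(c, 3) for c in shade((-1.0, 0.0, 0.0), (0.8, 0.8, 0.8))])
```

With these numbers the wall facing away from the Sun comes out at roughly (0.08, 0.072, 0.112). It is dark but not black, with a slight blue tint from the sky.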

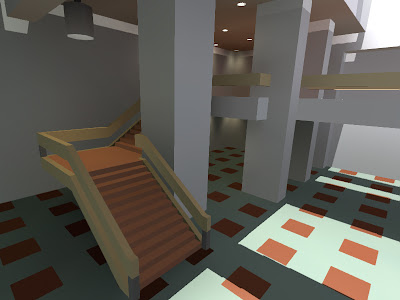

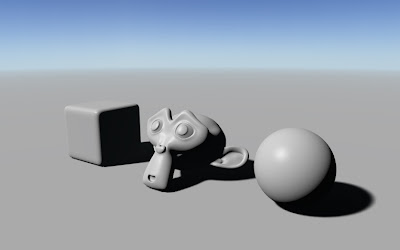

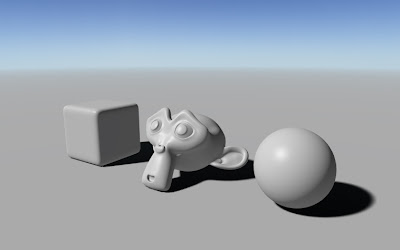

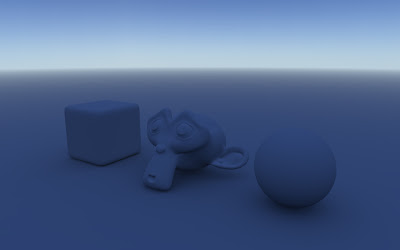

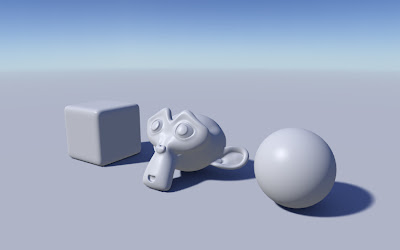

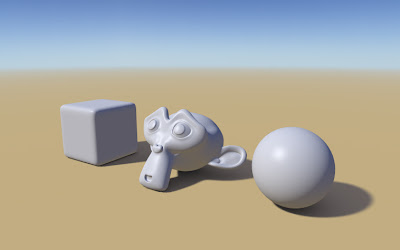

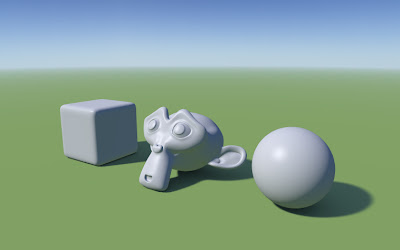

Some screenshots from the Blender viewport:

sunlight only (shadows on the ground are baked into a texture)

sunlight and reflected ground color

sky color only (ambient occlusion on the ground is baked into a texture)

full lighting model

dirt

grass

Blend file:

HERE